Catharsis and Other Creative Explorations with JavaScript

At NYU, I explored JavaScript to craft interactive experiences and experimented with API integration to develop my own take on generative AI. This journey blended creativity with technology, pushing the boundaries of digital interaction and AI-driven art.

Tools

p5.js, Visual Studio Code, Stable Diffusion, Replicate, JavaScript, HTML, CSS

Concept

Interaction Design/AI Integration

Project 1

Design Brief: To create a program that turns spoken prompts into images, visualising a person’s emotional state through their verbal catharsis.

Reasoning

I have often thought about how we mostly use AI programs as a means to an end. While experimenting with DALL·E, ChatGPT, Midjourney and similar tools, I realised that every time I reached for them, it was for a secondary purpose: solving a problem, doing research, or adding supplementary material to a project. I had never used one of these tools as an end in itself. Even when I had, it was for leisure or experimentation, never for a serious cause, and I wanted to see whether I could build a program like that using AI.

Most of my inspiration came from the meditation exercises we did in class. As I sat and watched my thoughts, I realised how detrimental and pessimistic my inner monologue could be at times, and I wondered whether other people had similar experiences. I felt a need to combat it by building a cathartic program, one where people could vent their negative perceptions only to be confronted with something beautiful. That contrast is intentional: it is meant to break the negative feedback loop in which one negative thought leads to another, and another, until something intercepts it. This tool is meant to serve as that interception.

Technical Journey

I wanted to integrate the API of an existing text-to-image program to use as a base for what I was trying to achieve.

For this reason, I used Stable Diffusion through Replicate and integrated its API into my p5.js sketch. I chose p5.js as my medium because I wanted to use its speech library, p5.speech.

I added code to render the image inside the same function that calls Stable Diffusion.
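For readers curious about the request itself, here is a minimal sketch of starting a prediction through Replicate’s HTTP API and polling it until the image is ready. It assumes Replicate’s `POST https://api.replicate.com/v1/predictions` endpoint with a model `version` id and a bearer token. `MODEL_VERSION`, `buildPredictionRequest` and `waitForImage` are hypothetical names, not the project’s actual code, so check Replicate’s current documentation before relying on the details. Calling the API straight from a browser sketch would expose your token, so a small server-side proxy is the safer setup.

```javascript
// Hypothetical placeholder: copy the real version id from the model's page on Replicate.
const MODEL_VERSION = "YOUR_STABLE_DIFFUSION_VERSION_ID";
const API_URL = "https://api.replicate.com/v1/predictions";

// Build the fetch() arguments for a new prediction (no network call here).
function buildPredictionRequest(prompt, token) {
  return {
    url: API_URL,
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ version: MODEL_VERSION, input: { prompt } }),
    },
  };
}

// Start a prediction, then poll its `urls.get` endpoint until it finishes.
// fetchFn and delayMs are injectable so the logic can be tested offline.
async function waitForImage(prompt, token, fetchFn = fetch, delayMs = 1000) {
  const { url, options } = buildPredictionRequest(prompt, token);
  let prediction = await (await fetchFn(url, options)).json();
  while (prediction.status !== "succeeded") {
    if (prediction.status === "failed" || prediction.status === "canceled") {
      throw new Error(`Prediction ${prediction.status}: ${prediction.error}`);
    }
    // Wait before asking Replicate for the prediction's status again
    await new Promise((resolve) => setTimeout(resolve, delayMs));
    const poll = await fetchFn(prediction.urls.get, {
      headers: { Authorization: `Bearer ${token}` },
    });
    prediction = await poll.json();
  }
  // Stable Diffusion models on Replicate typically return an array of image URLs
  return Array.isArray(prediction.output) ? prediction.output[0] : prediction.output;
}
```

In a p5 sketch, the resulting URL could then be displayed with something like `waitForImage(promptText, token).then((src) => createImg(src, promptText));`, where `promptText` and `token` are hypothetical variables.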

I created a separate variable to hold the speech recognition object.
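As an illustration of how that variable could feed the prompt box, here is a minimal sketch. It assumes `rec` is a `p5.SpeechRec` from the p5.speech library, which exposes `start()`, `continuous`, `interimResults`, `onResult`, `resultValue` and `resultString`, and that `promptBox` is a plain DOM input element. `attachSpeechToPrompt` is a hypothetical helper, not the project’s code.

```javascript
// Connect a p5.speech-style recognizer to a text input used as the prompt box.
// `rec` is assumed to behave like p5.SpeechRec; `promptBox` needs a `value` property.
function attachSpeechToPrompt(rec, promptBox) {
  rec.continuous = false;     // stop listening after one utterance
  rec.interimResults = false; // only report the final transcript
  rec.onResult = () => {
    // Copy the recognised sentence into the prompt box
    if (rec.resultValue) {
      promptBox.value = rec.resultString;
    }
  };
  // Calling listen() again (e.g. from a button) starts a fresh recording,
  // which could replace the page refresh the current version needs.
  return function listen() {
    rec.start();
  };
}
```

In a sketch this might be wired up as `const listen = attachSpeechToPrompt(new p5.SpeechRec("en-US"), promptInput.elt); createButton("Record").mousePressed(listen);`, where `promptInput` is a hypothetical `createInput()` element.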

UI trials: At first I used WebGL to create wave patterns on the canvas for an underwater effect, but that did not work because the waves were rendered on top of the Stable Diffusion image. As we learnt more about Spatial, I wanted to use three.js to add a panoramic image as a background. While that worked, I ran into the same layering issue. Ultimately I had to remove all the additional elements and simplify the program.

Getting rid of negative words: I wrote the following loop. It walks the array backwards so that removing an item with splice() does not cause the next item to be skipped:

```javascript
let words = ["positive", "negative", "awesome", "bad", "good"];

function setup() {
  createCanvas(400, 200);
  // Print the original array
  console.log("Original words array:", words);
  // Call a function to remove negative words
  removeNegativeWords();
  // Print the modified array
  console.log("Words array after removing negative words:", words);
}

function removeNegativeWords() {
  // Iterate backwards so splice() does not shift unvisited elements
  for (let i = words.length - 1; i >= 0; i--) {
    let word = words[i];
    // Check if the word is negative and remove it
    if (isNegative(word)) {
      words.splice(i, 1);
    }
  }
}

function isNegative(word) {
  // Define a list of negative words (you can customize this list)
  let negativeWords = ["negative", "bad", "wrong", "unpleasant"];
  // Check if the given word is in the negative words list
  return negativeWords.includes(word);
}
```
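The loop above filters a fixed array of single words. A spoken prompt, however, arrives as one sentence, so applying the same idea to a transcript would first require splitting it into words and normalising case and punctuation. Here is a minimal sketch of that step. It uses `Array.prototype.filter` in place of the backwards splice loop. `cleanPrompt` is a hypothetical name, and the word list is only an example.

```javascript
// Example list only; extend it to suit the prompts you expect.
const NEGATIVE_WORDS = new Set(["negative", "bad", "wrong", "unpleasant"]);

// Remove negative words from a spoken sentence and return the cleaned prompt.
function cleanPrompt(transcript) {
  return transcript
    .split(/\s+/) // break the sentence into words
    .filter((word) => {
      // Compare in lowercase with leading/trailing punctuation stripped
      const bare = word.toLowerCase().replace(/^[^a-z']+|[^a-z']+$/g, "");
      return !NEGATIVE_WORDS.has(bare);
    })
    .join(" ")
    .trim();
}
```

Because the original words are kept when they pass the filter, their capitalisation and punctuation survive in the returned prompt; only the comparison is normalised.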

Why Speech?

I wanted speech to be the main mode of input because I believe it is easier to vent in a conversational setting, as if talking to a therapist. While testing, I also found that spoken prompts feel less robotic and more human. This supports the goal of using the tool not as a means to an end but as an end in itself, for the sake of mental release.

Demonstration

Github link: https://github.com/RannaAdhikari5/Shared_Minds_Final_copy_2023_12_13_17_45_48

P5.js link: https://editor.p5js.org/ranna.adhikari58/full/7yBsCYp7S

sketch: https://editor.p5js.org/ranna.adhikari58/sketches/7yBsCYp7S

How to use: Refresh the page each time you want to use speech recognition, and click Reveal once your speech has been transcribed into the prompt box. You can also type prompts if speaking is inconvenient.

Future Scope and Troubleshooting:

Renders in 3D space.

Record Button.

DALL·E or Midjourney API

Restricting results to watercolour or surrealist/dreamy art styles.
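Restricting results to a particular style could start simply: append style keywords to every prompt before it is sent. Here is a minimal sketch. The style phrases are illustrative assumptions, and text-to-image models do not follow them reliably. Some Stable Diffusion deployments also accept a separate negative-prompt input, but check the specific model’s input schema before relying on that.

```javascript
// Illustrative style phrases; tune these by experimenting with the model.
const STYLES = {
  watercolour: "soft watercolour painting, gentle washes of colour",
  surreal: "dreamy surrealist artwork, soft light, ethereal",
};

// Append a style description to the user's prompt.
function stylizePrompt(prompt, styleName = "watercolour") {
  const style = STYLES[styleName];
  if (!style) {
    throw new Error(`Unknown style: ${styleName}`);
  }
  return `${prompt.trim()}, ${style}`;
}
```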

I originally wrote all the code in the p5.js editor. When I imported it into Visual Studio Code and went through it piece by piece, I realised that hardcoding the image containers in HTML could have caused the problems with how the elements were layering on the canvas. Rebuilding the UI will be my next step.
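If the image container is an HTML element sitting next to the p5 canvas, the browser stacks the two with CSS rather than with p5’s drawing order. Under that assumption, one possible fix is to give every layer absolute positioning and an explicit `z-index`. Here is a minimal sketch. `stackLayers` is a hypothetical helper, and each element only needs a `style` object, so it works with DOM nodes or with a p5 element’s `.elt`.

```javascript
// Stack elements listed from bottom to top by giving each an explicit z-index.
// Each element needs a `style` object, as DOM nodes (or a p5 element's .elt) have.
function stackLayers(elements) {
  elements.forEach((el, index) => {
    el.style.position = "absolute"; // z-index takes effect on positioned elements
    el.style.left = "0px";
    el.style.top = "0px";
    el.style.zIndex = String(index); // later in the list = drawn on top
  });
  return elements;
}
```

For example, `stackLayers([canvas.elt, generatedImg.elt, promptInput.elt])`, with hypothetical element names, would keep the generated image above the canvas effects and the prompt box above both.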

Project 2

Design Brief: To create an interactive meditation experience in p5.js.

Reasoning

During my time at NYU, I spent quite some time exploring JavaScript, specifically the p5.js library, to get creative and make art with code.

One such project explored mindfulness through an interactive meditation experience in p5.js that lets users meditate on the Seven Chakras.

For inspiration, I took a cultural approach and chose to depict ancient meditation and healing practices from Indian culture.

It is believed that every human has seven energy centres in the body, each responsible for different aspects of life. When one wants to heal a certain area, balancing the Chakra related to that area is considered really helpful. This can be done through meditation, using media such as sound, light, concentration, visualisation or anything else that is calming.

I resonate with sound and focus, so that is the approach I took for this assignment.

Technical Journey

For the outcome, I chose to experiment with 3 elements:

Motion

Graphic

Sound

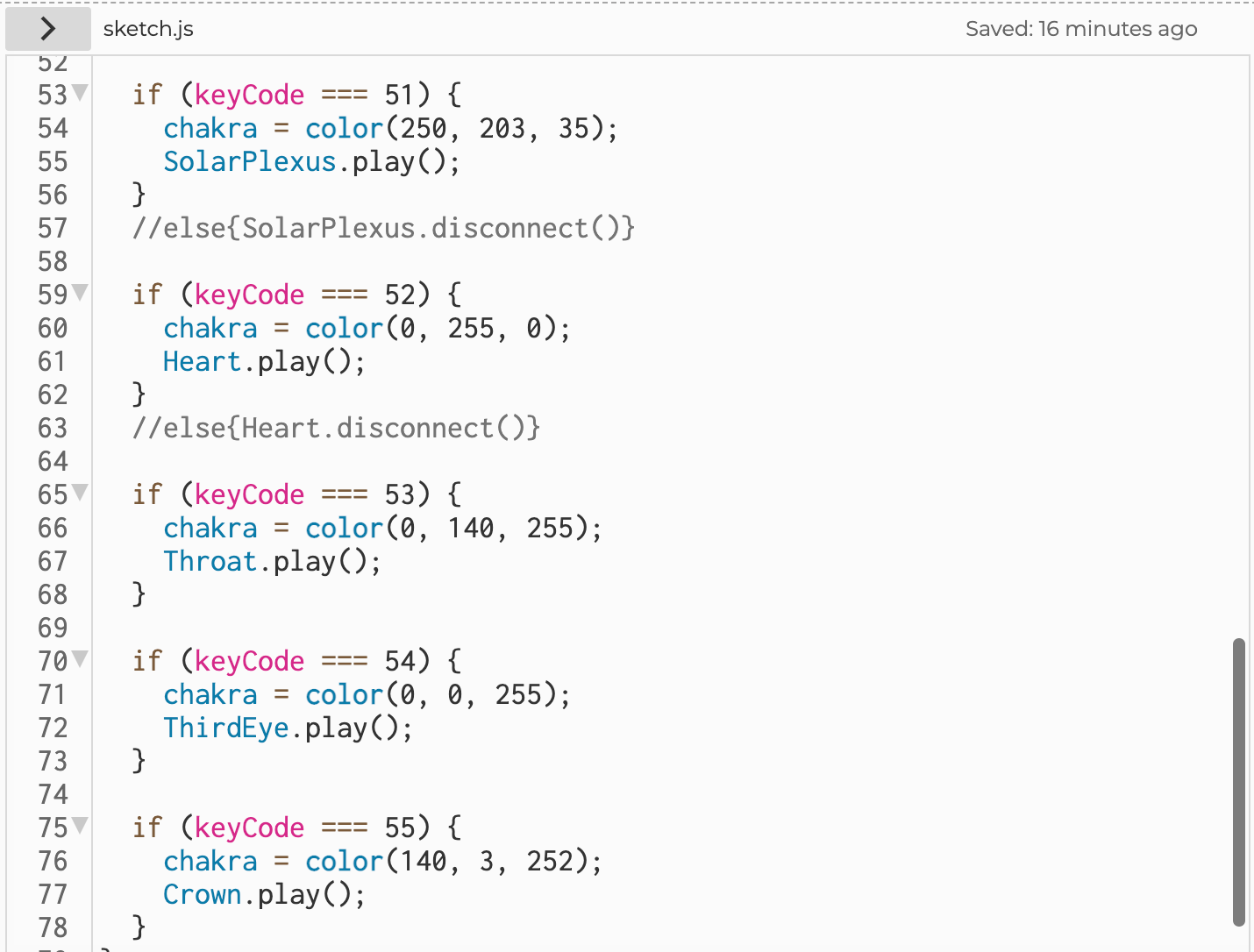

For motion and sound, I used simple logic-based code to rotate the object and to switch the sound on with key presses. I used audio samples from the YouTube channel Healing Vibrations, since I believe it has some of the best music for stimulating the senses. For the more graphic elements, I ventured into p5.js’s WEBGL rendering mode.
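For the rotation, a common pattern in p5’s WEBGL mode is to keep an angle variable, increase it every frame, and pass it to `rotateY()`. The sketch below ties that increment to p5’s `deltaTime`, the milliseconds since the last frame, so the spin speed stays steady even if heavy 3D drawing lowers the frame rate. `nextAngle` and the speed value are illustrative assumptions, not the project’s code.

```javascript
const FULL_TURN = Math.PI * 2;

// Advance a rotation angle by `speed` radians per second over `deltaMs` ms,
// wrapped into [0, 2π) so the value never grows without bound.
function nextAngle(angle, speed, deltaMs) {
  const next = angle + speed * (deltaMs / 1000);
  return ((next % FULL_TURN) + FULL_TURN) % FULL_TURN;
}

// In a p5 sketch with a WEBGL canvas this might be used as:
// let angle = 0;
// function draw() {
//   background(0);
//   angle = nextAngle(angle, 0.5, deltaTime);
//   rotateY(angle);
//   torus(80, 20);
// }
```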

The outcome is meant to be calming and to help the viewer focus.

I also wanted to play with the keyPressed() function, since I had done many assignments with mousePressed() and wanted to switch things up. keyPressed() also gives users more agency to choose which Chakra they want to focus on.
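Here is one way the key-to-Chakra logic could be organised, with each number key selecting a Chakra and its track. The key bindings and the `selectChakra` helper are hypothetical. The colours follow the commonly used rainbow association from Root to Crown. The `sounds` objects are assumed to behave like p5.sound’s `SoundFile` (`play()`, `stop()`, `isPlaying()`). Stopping the previous track before starting a new one keeps two recordings from overlapping.

```javascript
// Number keys 1-7 mapped to the seven Chakras, from Root to Crown.
const CHAKRAS = [
  { key: "1", name: "Root (Muladhara)", colour: "red" },
  { key: "2", name: "Sacral (Svadhisthana)", colour: "orange" },
  { key: "3", name: "Solar Plexus (Manipura)", colour: "yellow" },
  { key: "4", name: "Heart (Anahata)", colour: "green" },
  { key: "5", name: "Throat (Vishuddha)", colour: "blue" },
  { key: "6", name: "Third Eye (Ajna)", colour: "indigo" },
  { key: "7", name: "Crown (Sahasrara)", colour: "violet" },
];

// Switch to the Chakra bound to `pressedKey`, stopping whatever was playing.
// `sounds` maps Chakra names to SoundFile-like objects (e.g. loaded in preload()).
// Returns the selected Chakra, or the current one if the key is unbound.
function selectChakra(pressedKey, current, sounds) {
  const chakra = CHAKRAS.find((c) => c.key === pressedKey);
  if (!chakra) return current;
  if (current && sounds[current.name].isPlaying()) {
    sounds[current.name].stop();
  }
  sounds[chakra.name].play();
  return chakra;
}

// In p5:
// let current = null;
// function keyPressed() { current = selectChakra(key, current, sounds); }
```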

Demonstration

Give it a whirl: https://editor.p5js.org/ranna.adhikari58/sketches/gt0rr2FXs

Challenges and Takeaways:

The main challenge I faced was sound: playback was initially choppy and jarring, possibly because the moving WebGL elements were taking up a lot of processing power.

I eventually found a way around this.

Overall this was an interesting project that led me to explore more libraries and study different ways of writing code.

Project 3

Design Brief: To explore patterns and get creative with their interpretation using JavaScript.

Reasoning

When I think of patterns, I’m always taken back to the very first place where I learnt to see patterns as a child: The Sky!

During the day, I would look for familiar figures in the clouds, and at night, I would look for constellations in the stars.

The latter has stayed with me to this day. I have always been intrigued by both astronomy and astrology, which is why I find myself looking up at the night sky more than at the road ahead. I am constantly looking for planets I can identify or constellations with a distinct pattern.

Many constellations are named after objects from our daily lives, and it takes only the slightest resemblance for our minds to read a pattern as an object we are familiar with.

If you look closely, it’s kind of bizarre how some people managed to see certain objects in those patterns at all! In my opinion, the constellation Leo looks nothing like a lion to the naked eye!

Seeing patterns in the sky where there are none is a fun thing to do, and I wanted to recreate that experience using p5.js.

Technical Journey

Technically, this is a very simple project; its outcome is meant to tap into the joy of interpretation rather than to showcase something specific.

I created a canvas that mimics the night sky. It works as a Constellation Generator, placing tiny ellipses at random locations on the canvas to create vague patterns that turn out different each time. You might recognise some of them as objects you encounter in daily life.

I used the p5.js random() function to place the stars.
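Here is a minimal sketch of the random placement. It is written as plain JavaScript so it can run outside p5. The star count, the size range and the `generateStars` name are assumptions. In p5, the `rng` argument could be left as the default, or the body could call `random(min, max)` directly, and each star could be drawn with `ellipse(x, y, size)`.

```javascript
// Scatter `count` stars across a width x height canvas.
// `rng` returns a number in [0, 1); Math.random is used by default.
function generateStars(count, width, height, rng = Math.random) {
  const stars = [];
  for (let i = 0; i < count; i++) {
    stars.push({
      x: rng() * width,
      y: rng() * height,
      size: 1 + rng() * 3, // diameter between 1 and 4 pixels
    });
  }
  return stars;
}

// In p5:
// function setup() {
//   createCanvas(600, 400);
//   background(10, 10, 30);
//   noStroke();
//   fill(255);
//   for (const s of generateStars(120, width, height)) ellipse(s.x, s.y, s.size);
// }
```

Passing a custom `rng` also makes it easy to reproduce a particular sky, for instance by using a seeded generator.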

Demonstration

Count your stars: https://editor.p5js.org/ranna.adhikari58/sketches/jfJnUivnE

It would be interesting to see what kind of patterns people find in this work!

The intention of this piece is to let patterns happen organically and to let people visualise what they want to.